Now think for a moment about how arithmetic coding works.

We have essentially "output" the bit sequence 10. As you can see, this is the "old" state with the sequence 10 added to the end. The new state is 46, which is 101110 in binary. So let's start with the state 11 (which is 1011 in binary), encode the symbol B. You can think of encoding as a function which takes the current state, and a symbol to encode, and returns the new state: Suppose our state is a large integer, which we will call $s$.

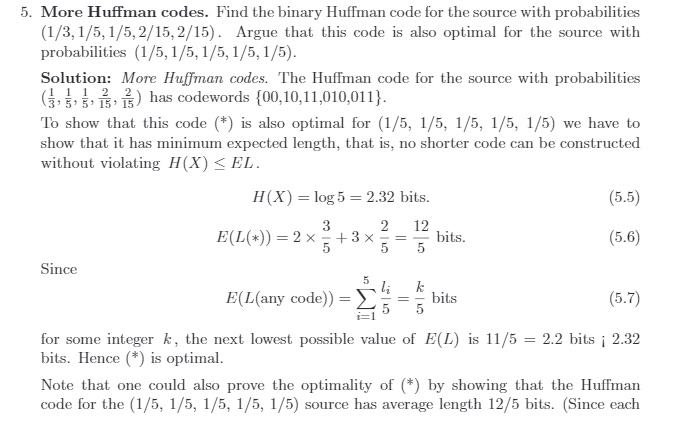

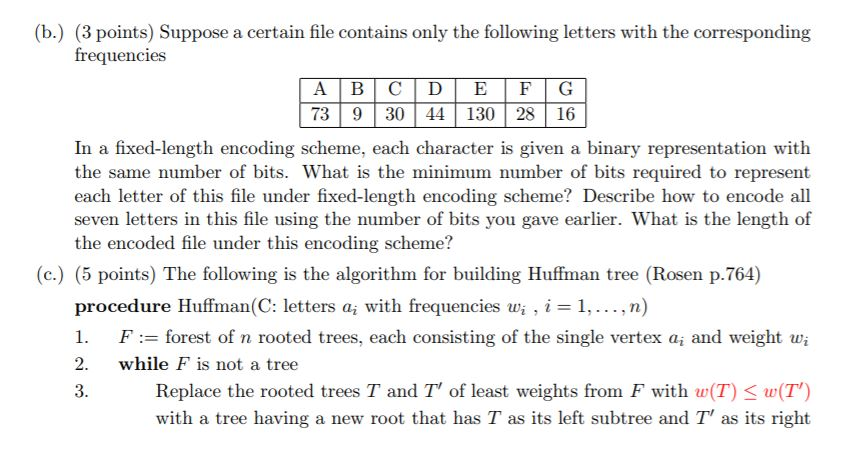

#Betterzip huffman code code

We will use the canonical code 0, 10, 11 for this example. Because the probabilities are all inverse powers of two, this has a Huffman code which is optimal (i.e. Suppose you have an alphabet of three symbols, A, B, and C, with probabilities 0.5, 0.25, and 0.25. Let's look at a slightly different way of thinking about Huffman coding. The question is, are there any papers or solutions to improve on huffman coding with a similar idea to fractional-bit-packing to achieve something similar to arithmetic coding? (or any results to the contrary). However, you can't just use the solution of fracitonal-bit-packing, because this solution assumes fixed sized symbols. The problem is similar with Huffman coding: you end up with codes that must be non-fractionally-bit-sized in length, and therefore it has this packing inefficiency. Just like multiplication of powers-of-two are shifting x * 2 = x << 1 and x * 4 = x << 2 and so on for all powers of two, so too you can "shift" with a non-power-of-2 by multiplying instead, and pack in fractional-bit-sized symbols. A solution to this problem is something I've seen referred to as "fractional bit packing", where you are able "bitshift" by a non-power of two using multiplication. That is, suppose you have 240 possible values for a symbol, and needed to encode this into bits, you would be stuck with 8 bits per symbol, even though you do not need a "full" 8, as 8 can express 256 possible values per symbol. In trying to understand the relationships between Huffman Coding, Arithmetic Coding, and Range Coding, I began to think of the shortcomings of Huffman coding to be related to the problem of fractional bit-packing.
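Assuming the pictured encoding function simply appends each symbol's codeword bits to the binary form of $s$ (which is what the state 11, symbol B example shows), it can be written for the canonical code A = 0, B = 10, C = 11 as:

$$
\begin{aligned}
\operatorname{encode}(s, \mathrm{A}) &= 2s \\
\operatorname{encode}(s, \mathrm{B}) &= 4s + 2 \\
\operatorname{encode}(s, \mathrm{C}) &= 4s + 3
\end{aligned}
$$

Multiplying by $2$ or $4$ shifts $s$ left by the codeword length, and adding $0$, $2$, or $3$ writes the codeword bits into the freed low-order positions. For example, $\operatorname{encode}(11, \mathrm{B}) = 4 \cdot 11 + 2 = 46 = 101110_2$.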

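The analogy in the question can be sketched in Python, assuming the state is an unbounded integer (Python's `int`). Both techniques below use an update of the form $s \to s \cdot b + d$: Huffman encoding uses $b = 2^{\ell}$ for a codeword of length $\ell$, while fractional bit packing uses an arbitrary base such as 240. The function names (`huffman_encode`, `huffman_decode`, `pack`, `unpack`) and the use of a starting state of 1 as a sentinel are hypothetical choices for illustration, not an established API.

```python
# Minimal sketch: Huffman coding and fractional bit packing viewed as the same
# state update, s -> s * base + digit. All names here are hypothetical.

# Canonical code from the example: A = 0, B = 10, C = 11.
CODE = {"A": "0", "B": "10", "C": "11"}
DECODE = {bits: sym for sym, bits in CODE.items()}


def huffman_encode(s: int, symbol: str) -> int:
    """Append the codeword bits of `symbol` to the binary form of state s."""
    bits = CODE[symbol]
    # Shifting left by len(bits) multiplies by 2**len(bits); the codeword's
    # integer value then fills the freed low-order bits.
    return (s << len(bits)) | int(bits, 2)


def huffman_decode(s: int, prefix_len: int = 1) -> list[str]:
    """Prefix-decode the bits that follow the initial state's bits."""
    # Assumes encoding started from a state whose binary form is `prefix_len`
    # bits long (1 for the sentinel state 1), so a leading "A" is not lost.
    bits = bin(s)[2:][prefix_len:]
    out, current = [], ""
    for b in bits:
        current += b
        if current in DECODE:  # prefix-free code: the first match is correct
            out.append(DECODE[current])
            current = ""
    return out


def pack(values: list[int], base: int = 240) -> int:
    """Fractional bit packing: 'shift' by a non-power of two via multiplication."""
    s = 0
    for v in values:
        if not 0 <= v < base:
            raise ValueError(f"value {v} out of range for base {base}")
        s = s * base + v
    return s


def unpack(s: int, count: int, base: int = 240) -> list[int]:
    """Undo pack() with repeated divmod; values come out last-in, first-out."""
    out = []
    for _ in range(count):
        s, v = divmod(s, base)
        out.append(v)
    return out[::-1]  # restore the original order


if __name__ == "__main__":
    # The worked example: state 11 (0b1011), encode B -> 46 (0b101110).
    print(huffman_encode(11, "B"), bin(huffman_encode(11, "B")))

    s = 1  # sentinel state
    for sym in "ABCAB":
        s = huffman_encode(s, sym)
    print(huffman_decode(s))

    values = [239, 0, 17, 128, 5] * 20  # 100 symbols, each in range(240)
    packed = pack(values)
    print(packed.bit_length(), "bits vs", 8 * len(values), "bits at 8 per symbol")
    assert unpack(packed, len(values)) == values
```

For 100 symbols drawn from 240 values, the packed integer needs roughly $100 \log_2 240 \approx 791$ bits rather than 800. Note that `unpack` recovers values from the low-order end (last in, first out), whereas `huffman_decode` here reads the bit string front to back; the Huffman case can do this only because every "base" is a power of two, so the codeword boundaries line up with bit boundaries.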